Installation

Design Museum Ghent commissioned an installation based on earlier work I did with the collection. I create work with "found data", but a large part of this project was creating new data in relation to the starting data.

More info on the data and algorithms for this project can be found here. The installation was both an explorer for the collection and a creative tool. It also showcased the results of a live-coded visuals (Hydra) workshop I taught at Mutation Festival. The installation was built around the everlasting question:

CAN ARTIFICIAL INTELLIGENCE DESIGN A CHAIR?

Are machines capable of understanding the intricacies of design? And does "more data" actually generate "better results"?

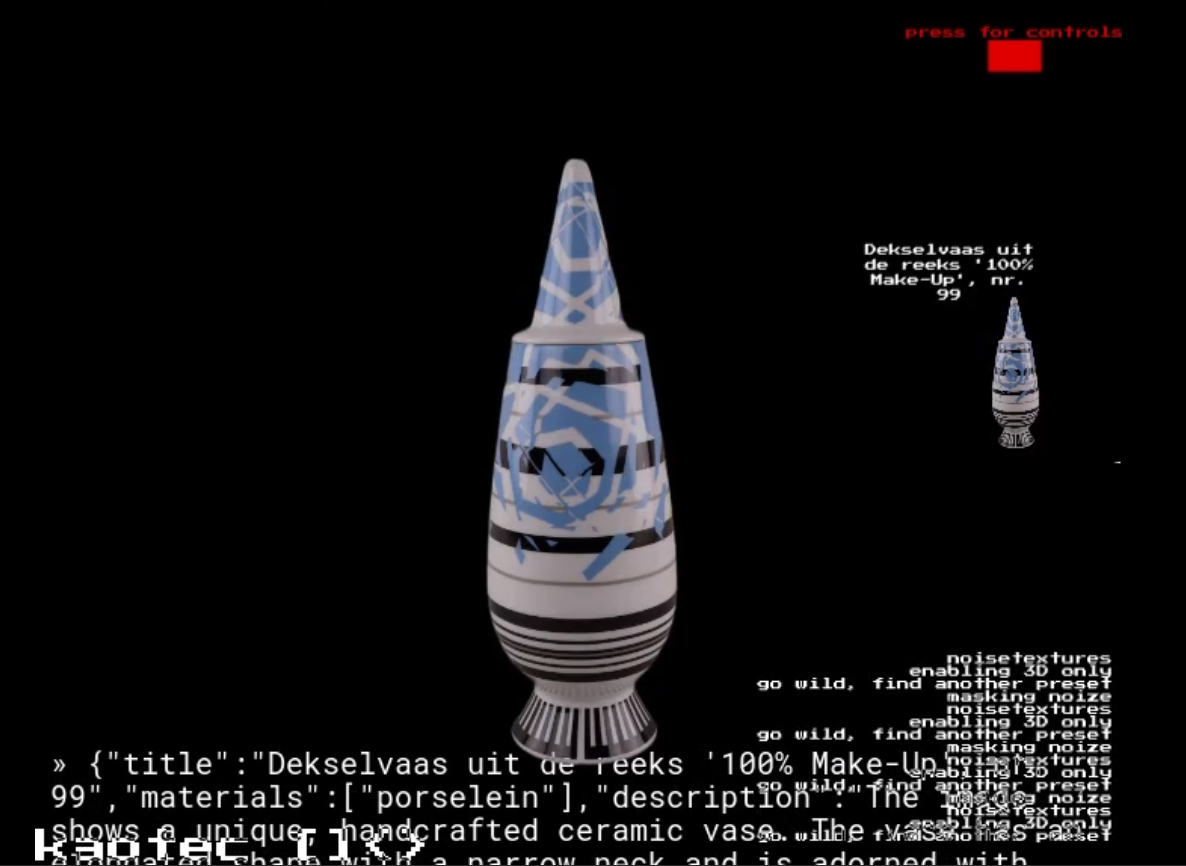

The project Lost In Diffusion by artist/coder and engineer Kasper Jordaens is an attempt to better understand the role of algorithms and AI models in relation to a museum collection. AI has proven supportive in turning archival data into accessible, interconnected, and intelligible information. However, its potential for "generating" new data in meaningful ways is a domain left largely untapped. Given access to our collection database, containing both metadata and images of the design objects represented in the collection of the museum, Kasper Jordaens (re-)trained a diffusion model* in an attempt to make the machine understand the intricacies of design, as defined through the (diffuse) scope of the collection, and also to generate a model that could potentially act as a co-agent, next to the designer, in the process of design.

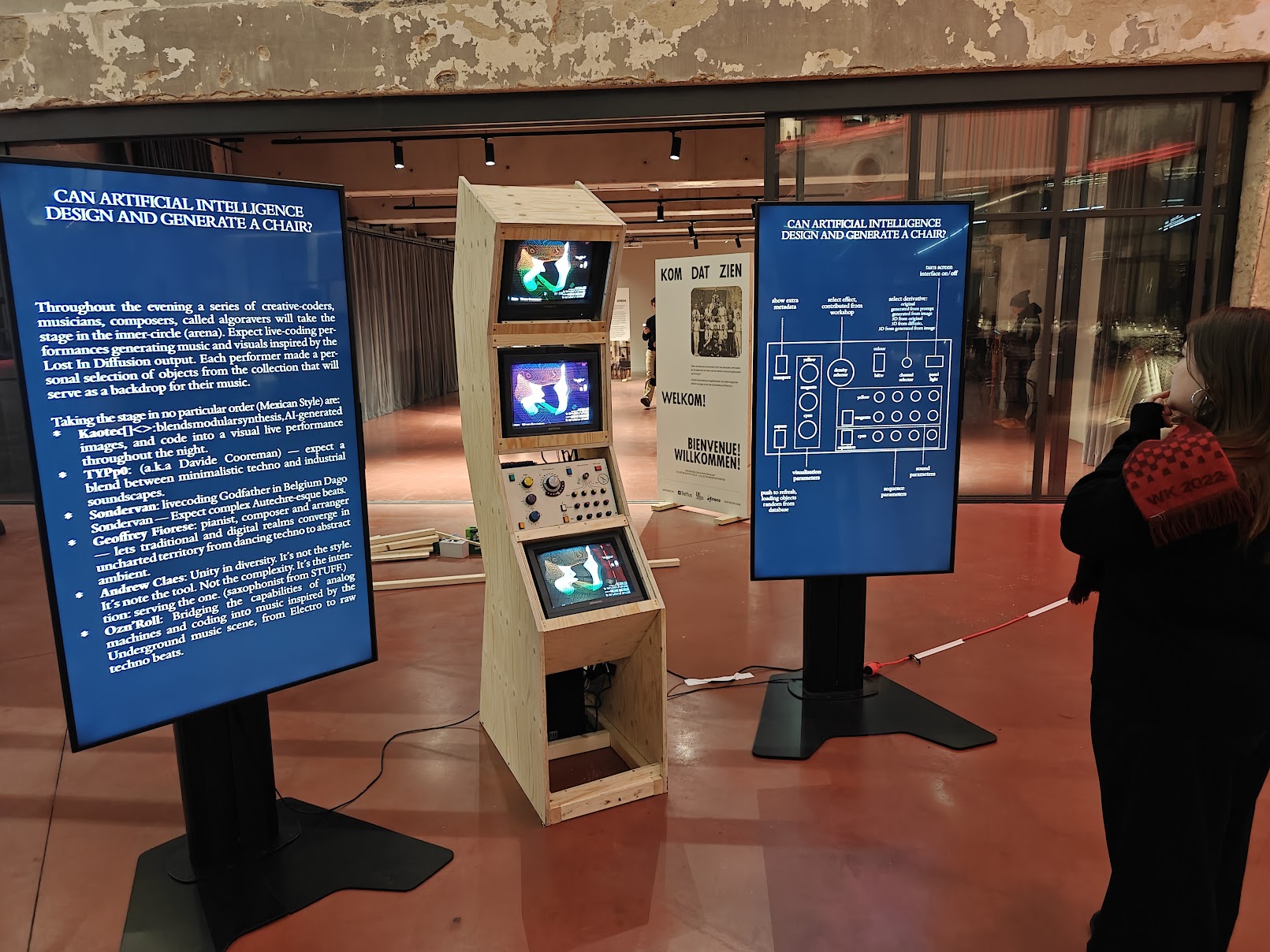

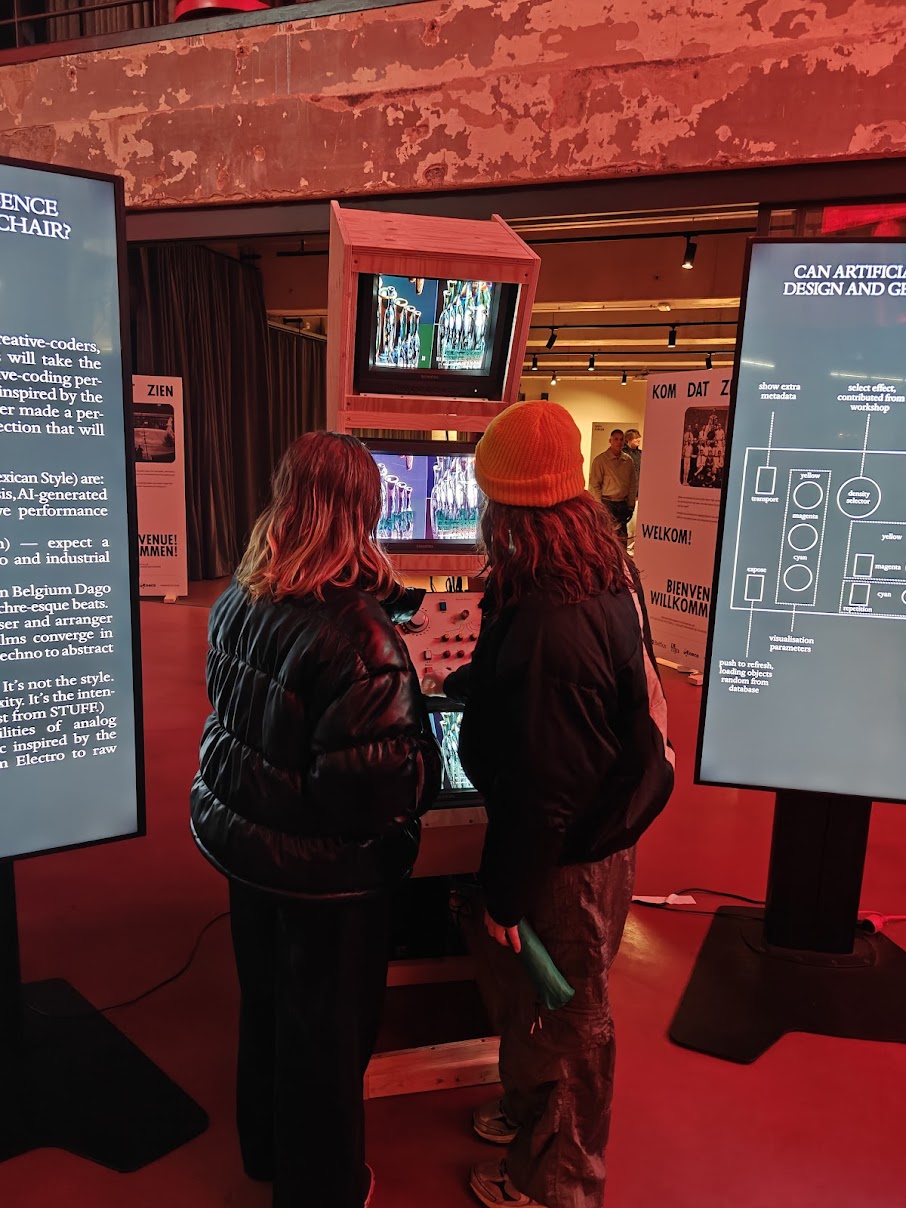

To put this model to the test, the results are shown during Museumnacht in two forms: as an interactive kiosk, which allows the visitor to explore parts of the museum collection using results from the workshop at Mutation Festival, and as data input for the visual backdrop of the Algorave, a live performance where creative coding, music and graphic design intersect.

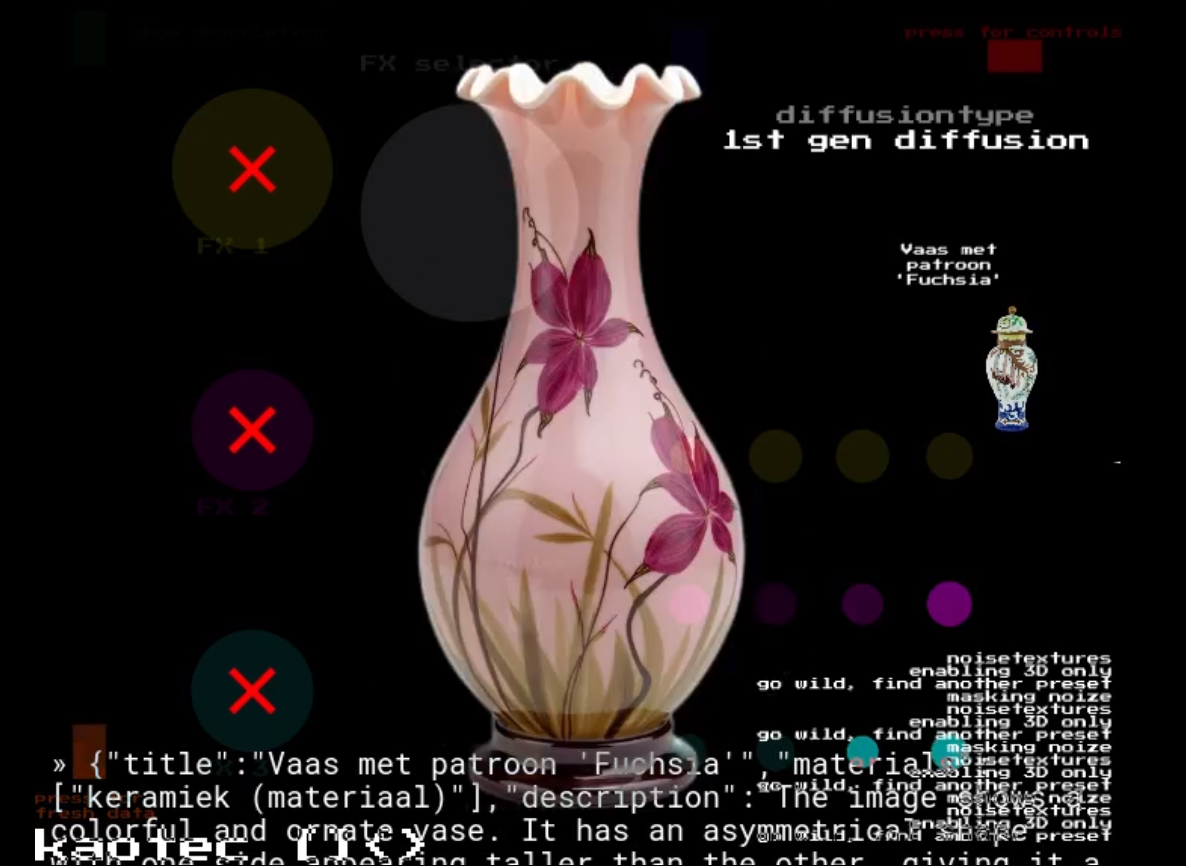

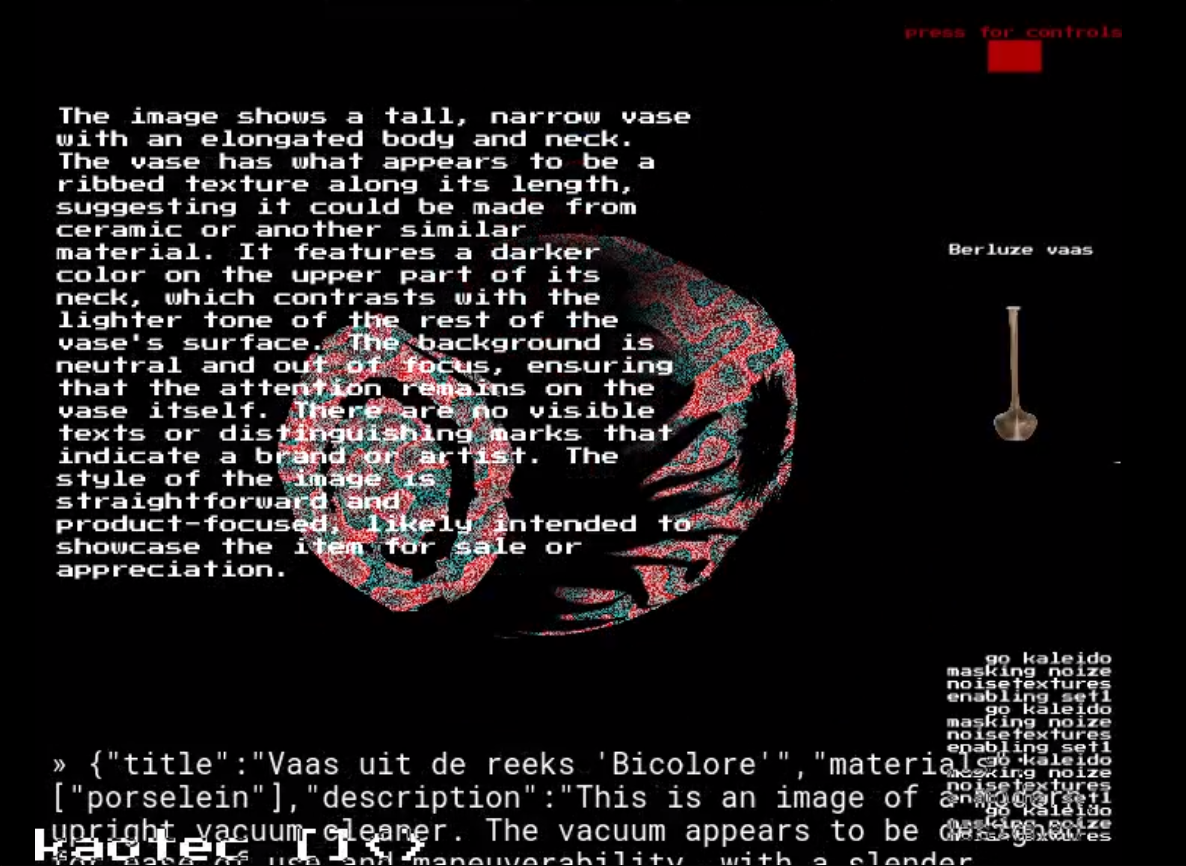

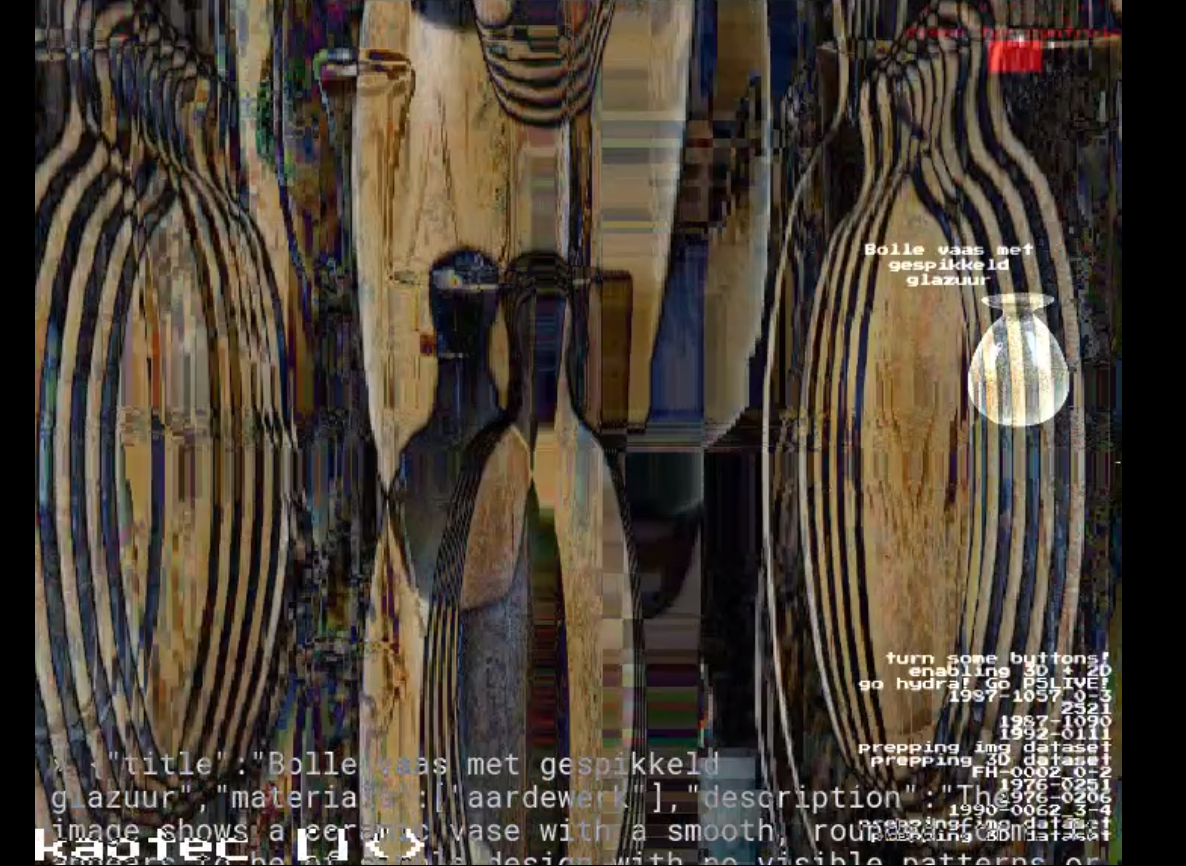

The collection explorer

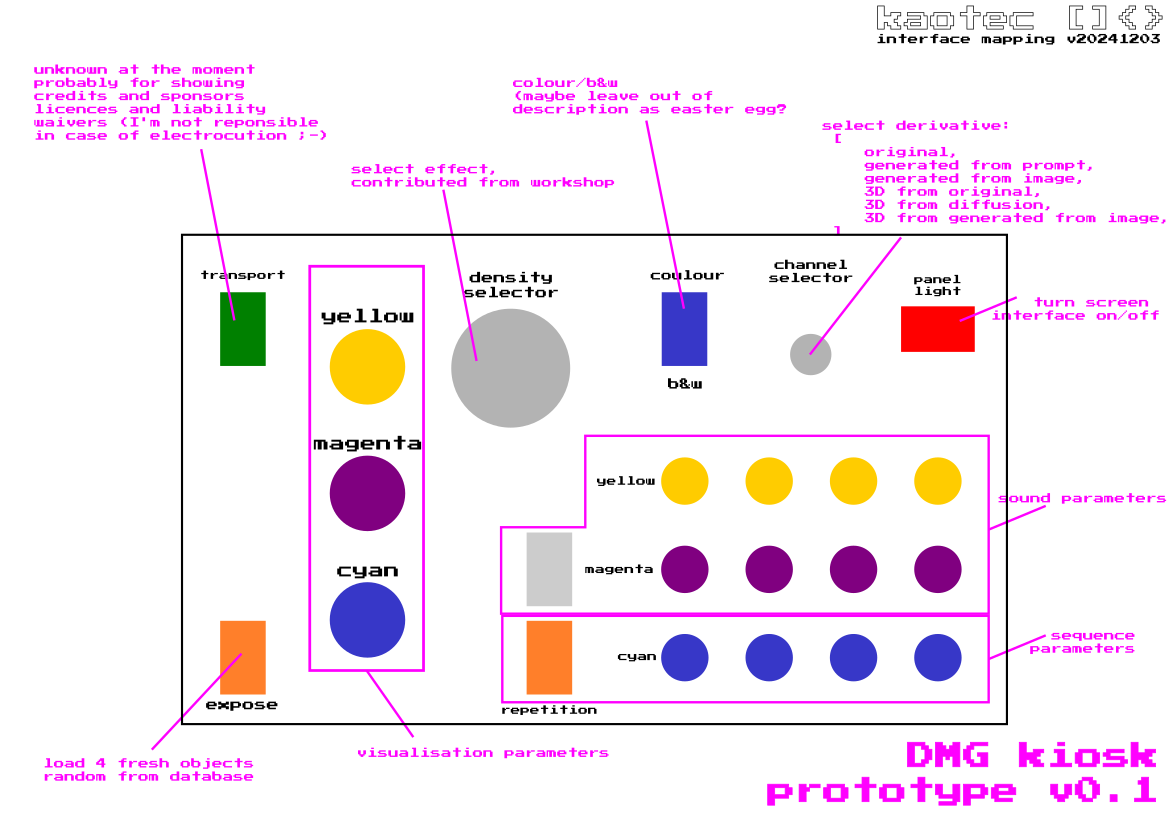

The resulting collection can be explored in a playful way using a purpose-built kiosk containing three CRT screens and a controller built from found hardware. While the visitor explores the collection, the installation also creates sound. The atmospheric and sometimes rhythmic sound invites the user to keep exploring. Effects can be added to images of the collection. These were contributed by participants of the live coding workshop at Mutation Festival.

Below are some impressions of the machine: a purpose-built kiosk and version 0.1 of the museum data explorer, still a work in progress but fully operational. The controller is a revamp of the pumpkinmaster3000 (which served in pumpkin orchestra and data intersect study), now expanded with a Raspberry Pi to run the real-time visuals.

images/video

The process

For more insight into how I processed the data, have a look at the Lost In Diffusion page.

Thanks to Olivier Van D'huynslager and the team from Design Museum Gent for this great collaboration and opportunity.